Data Driven 2D-to-3D Video Conversion for Soccer

Publication

IEEE Transactions on Multimedia

Authors

Kiana Calagari, Mohamed Elgharib, Piotr Didyk, Alexandre Kaspar, Wojciech Matusik, Mohamed Hefeeda

Abstract

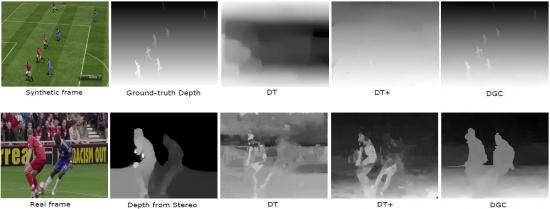

Wide adoption of 3D video is hindered by the lack of high-quality 3D content. One promising solution to this problem is data-driven 2D-to-3D video conversion. Such approaches learn depth maps from a large dataset of 2D+Depth images. However, current conversion methods, while general, produce low-quality results with artifacts that are not acceptable to many viewers. We propose a novel data-driven method for 2D-to-3D video conversion. Our method transfers the depth gradients from a large database of 2D+Depth images. Capturing 2D+Depth databases, however, is complex and costly, especially for outdoor sports games. We address this problem by creating a synthetic database from computer games and showing that this synthetic database can effectively be used to convert real videos. We propose a spatio-temporal method to ensure the smoothness of the generated depth within individual frames and across successive frames. In addition, we present an object boundary detection method customized for 2D-to-3D conversion systems, which produces clear depth boundaries for players. We implement our method and validate it by conducting user studies that evaluate depth perception and visual comfort of the converted 3D videos. We show that our method produces high-quality 3D videos that are almost indistinguishable from videos shot by stereo cameras. In addition, our method significantly outperforms the current state-of-the-art method. For example, our method achieves up to 20% improvement in perceived depth, which translates to improving the mean opinion score from Good to Excellent.
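
The abstract says the method transfers depth gradients but does not spell out how a depth map is recovered from them. As an illustration only, the sketch below shows one common way to do this: a least-squares (Poisson-style) reconstruction that finds the depth map whose forward differences best match a given gradient field. The function names `forward_diff_ops` and `depth_from_gradients`, the single anchor pixel, and the dense solver are assumptions made for this example and are not taken from the paper.

```python
import numpy as np


def forward_diff_ops(h, w):
    """Dense forward-difference operators for an h x w image flattened row-major."""
    n = h * w
    idx = np.arange(n).reshape(h, w)

    # Horizontal differences: d[y, x + 1] - d[y, x]
    left, right = idx[:, :-1].ravel(), idx[:, 1:].ravel()
    gx = np.zeros((left.size, n))
    gx[np.arange(left.size), left] = -1.0
    gx[np.arange(left.size), right] = 1.0

    # Vertical differences: d[y + 1, x] - d[y, x]
    top, bottom = idx[:-1, :].ravel(), idx[1:, :].ravel()
    gy = np.zeros((top.size, n))
    gy[np.arange(top.size), top] = -1.0
    gy[np.arange(top.size), bottom] = 1.0
    return gx, gy


def depth_from_gradients(grad_x, grad_y, anchor_depth=0.0):
    """Recover a depth map from its gradients by linear least squares.

    grad_x has shape (h, w - 1) and grad_y has shape (h - 1, w).
    Gradients fix depth only up to a constant, so the top-left pixel
    is pinned to anchor_depth (an assumption of this sketch).
    """
    h, w = grad_x.shape[0], grad_y.shape[1]
    gx_op, gy_op = forward_diff_ops(h, w)

    anchor = np.zeros((1, h * w))
    anchor[0, 0] = 1.0

    a = np.vstack([gx_op, gy_op, anchor])
    b = np.concatenate([grad_x.ravel(), grad_y.ravel(), [anchor_depth]])

    depth, *_ = np.linalg.lstsq(a, b, rcond=None)
    return depth.reshape(h, w)


if __name__ == "__main__":
    # Toy "field" depth ramp with a raised block standing in for a player.
    reference = np.tile(np.linspace(0.0, 1.0, 6)[:, None], (1, 8))
    reference[2:4, 3:5] += 0.5

    gx = np.diff(reference, axis=1)
    gy = np.diff(reference, axis=0)
    recovered = depth_from_gradients(gx, gy, anchor_depth=reference[0, 0])
    print("max abs error:", np.abs(recovered - reference).max())
```

The dense operators keep the example short but scale poorly; a real frame would need sparse matrices and an iterative solver. In a conversion pipeline the gradients would come from matched database images rather than from a known depth map, so they would generally be inconsistent, and the least-squares solution would then be a best fit rather than an exact recovery.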